Powering Tomorrow: Why Our Grid Needs Chance-Informed Decision-Making

By Dr. Sam Savage, Executive Director

and

Daniel Krashin, Chair of Renewable Energy Applications

ProbabilityManagement.org

What’s Not to Like

What’s not to like? Free energy from the sun and wind has arrived just as the demand for power skyrockets to feed our electric cars and the giant data centers toiling away on the production of cryptocurrency and artificial intelligence.[i] The good news is that there is plenty of renewable energy to go around. The bad news is that it can only be used if it arrives at the right time at the right place.

The Right Time

The “right time” problem is that renewable energy is generated at random times determined by Mother Nature. Solar power generated at midday does not help power air conditioners in the evening when it’s still hot. This problem can be solved fairly quickly through energy storage with battery systems of different sizes.

The Right Place

The “right place” problem will take more time.

According to NPR,

“So many people want to connect their new solar and wind projects to the grid right now that it's creating a massive traffic jam. All those projects are stuck in line: the interconnection queue.”[ii]

With carefully planned expansion of our power grid, this problem will be conquered. However, re-engineering the grid will take a while: it could cost as much as the combined value of Google and Amazon, and it will require thousands of skilled electrical engineers. In the meantime, we must figure out how to keep our grid running smoothly, ensuring it delivers stable, clean, and affordable energy.

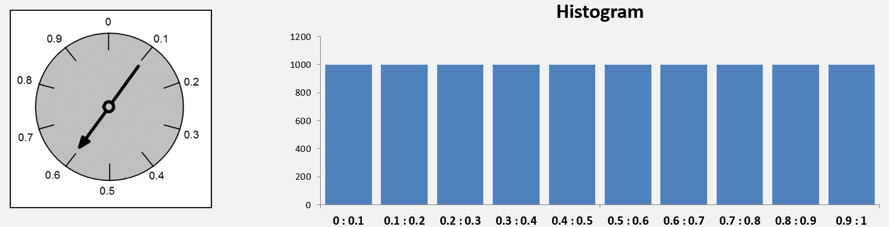

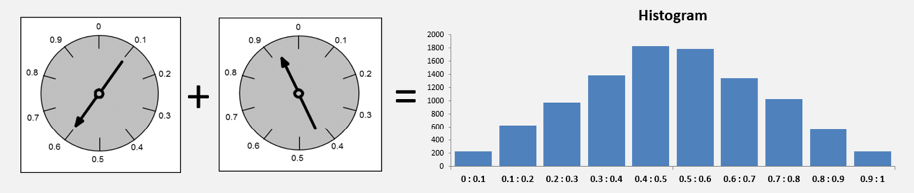

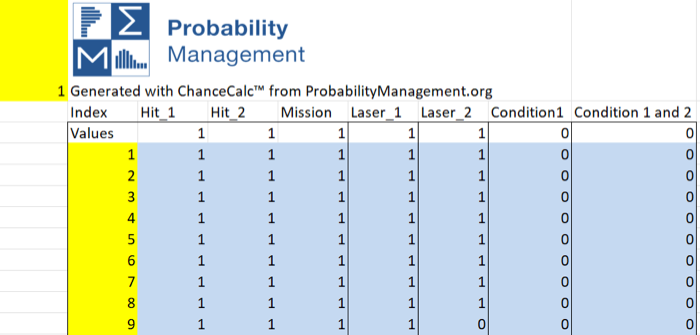

The Right Communication of Uncertainty

What do all these challenges have in common? Uncertainty. In fact, from long-term investment decisions to hourly operational decisions, chance-informed analysis of uncertain power demand and prices will separate the winners from the losers and the neighborhoods with electricity from the neighborhoods with outages. How will such uncertainties be communicated and managed? Our bet is SIP Libraries that take advantage of the discipline of probability management.[iii] For investment decisions they might be updated monthly; for operational decisions they might be updated by the minute.
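For readers new to the idea, here is a minimal sketch of what working with SIPs can look like. A SIP (Stochastic Information Packet) represents an uncertain quantity as an array of simulated trials, so uncertainties can be combined with ordinary arithmetic, one trial at a time. Everything specific below is an assumption made for illustration: the `make_sip` helper, the megawatt figures, and the distributions are hypothetical and are not drawn from any actual SIP Library.

```python
import random

TRIALS = 10_000

def make_sip(sampler, trials, seed):
    """Return a SIP: a list of `trials` simulated outcomes (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the SIP is reproducible
    return [sampler(rng) for _ in range(trials)]

def mean(values):
    return sum(values) / len(values)

# Illustrative one-hour figures in MW. These distributions are assumptions.
# The three SIPs are generated independently here for simplicity; a real
# library would be expected to preserve the relationships among them.
solar = make_sip(lambda r: max(0.0, r.gauss(40, 15)), TRIALS, seed=1)
wind = make_sip(lambda r: max(0.0, r.gauss(35, 20)), TRIALS, seed=2)
demand = make_sip(lambda r: r.gauss(60, 8), TRIALS, seed=3)

# Arithmetic is done trial by trial, so each result is itself a SIP.
supply = [s + w for s, w in zip(solar, wind)]
shortfall = [max(0.0, d - s) for d, s in zip(demand, supply)]

# Chance-informed answers: how often, and by how much, does supply fall short?
chance_of_shortfall = sum(x > 0 for x in shortfall) / TRIALS
average_shortfall = mean(shortfall)

# The same calculation using only average inputs hides the risk entirely.
shortfall_at_averages = max(0.0, mean(demand) - mean(supply))

print(f"Chance of shortfall:   {chance_of_shortfall:.1%}")
print(f"Average shortfall:     {average_shortfall:.2f} MW")
print(f"Shortfall at averages: {shortfall_at_averages:.2f} MW")
```

Under these made-up numbers, average supply comfortably exceeds average demand, so a single-number analysis reports no shortfall, while the trial-by-trial calculation shows a real chance of coming up short. That gap is the kind of information a shared library of SIPs is meant to communicate.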

The Right Analysis

Many of the required analytical techniques such as portfolio and option theory have already evolved in finance and are even more applicable here. Why? The renewable energy market is still young and inefficient, providing significant opportunities for capitalizing on investments, especially in energy arbitrage.

What will the correct analysis accomplish?

Identify locations with the highest potential for long-term profitability.

Optimize installed capacity to maximize return on investment (ROI).

Construct financial plans that accelerate the achievement of the break-even point.

Demonstrate the impact of policy changes on outcomes.

Provide transparent analysis of the effects from climate disasters or system failures.

Want to learn more?

Download Models at ProbabilityManagement.org - Renewable Energy

References:

[i] https://www.washingtonpost.com/business/2024/03/07/ai-data-centers-power/

[ii] https://www.npr.org/2023/05/16/1176462647/green-energy-transmission-queue-power-grid-wind-solar

[iii] https://en.wikipedia.org/wiki/Probability_management

Copyright © 2024 Sam L. Savage